Optimizing My Site with Cloudflare Cache Rules: A Performance Journey

2025-05-07

How I improved the performance of my website, built with React Router v7 (which evolved from Remix v2) and deployed on Cloudflare Pages, by optimizing Cloudflare Cache Rules for images, CSS/JS assets, and RSS feeds

For the past few months, I've been building and iterating on my personal site with React Router v7 (which evolved from Remix v2), deployed on Cloudflare Pages. Everything was working great, until I started looking at my network requests. To my surprise, most of my resources were marked as "DYNAMIC" in Cloudflare, which means they weren't being cached at all. What's the point of using a global CDN if everything gets served directly from the origin?

```shell
$ curl --silent -I https://kenwagatsuma.com/images/profile.webp | grep "cf-cache-status"
cf-cache-status: DYNAMIC
```

The Cache Conundrum

I discovered a painful truth: deploying on Cloudflare doesn't mean your content automatically gets cached optimally. When I checked the response headers, I realized Cloudflare was not respecting my existing cache directives (the Cache-Control headers set in my loader and headers functions) and was instead falling back to its default of "don't cache anything." Not ideal.

This behavior puzzled me at first, but after diving deeper into Remix's architecture, I understood the issue. React Router and Remix use a powerful loader pattern to fetch data on the server and client. When you navigate to a route, Remix does something clever:

- The initial HTML is server-rendered with the data from your loader

- When you navigate to another route client-side, Remix fetches data from your loaders via background API calls

- These background fetches don't include cache headers by default

Here's where things get tricky: Cloudflare seems to treat these background loader requests as regular API calls with no cache directives. So even though my content was relatively static (blog posts that rarely change), Cloudflare treated every request as a dynamic, uncacheable API call. Even for loader responses where I did set Cache-Control directives, Cloudflare decided they were dynamic and should not be cached. This was an "aha!" moment for me, as I realized how fundamentally SSR shapes the way a site interacts with a CDN.
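For reference, setting Cache-Control from a route's `headers` export in React Router v7 looks roughly like the sketch below. It is a minimal, self-contained approximation: the `HeadersArgs` type here is a hypothetical stand-in that only models `loaderHeaders`, and the fallback value is illustrative rather than what my site actually uses.

```typescript
// Minimal stand-in for React Router's headers() arguments; the real type
// also carries parentHeaders, actionHeaders, errorHeaders, and more.
type HeadersArgs = { loaderHeaders: Headers };

// Hypothetical fallback used when a loader sets no Cache-Control.
const DEFAULT_CACHE_CONTROL =
  "public, max-age=3600, s-maxage=28800, stale-while-revalidate=3600";

// Route module `headers` export: reuse the loader's Cache-Control for the
// server-rendered document response, or fall back to the default above.
export function headers({ loaderHeaders }: HeadersArgs): Headers {
  const cacheControl =
    loaderHeaders.get("Cache-Control") ?? DEFAULT_CACHE_CONTROL;
  return new Headers({ "Cache-Control": cacheControl });
}
```

Setting these headers only expresses intent; as described above, Cloudflare still classified such responses as DYNAMIC in my setup until I added explicit Cache Rules.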

Cloudflare's default behavior seems to be conservative. This makes sense from a safety perspective (better to serve fresh content than stale), but it defeats the purpose of a CDN for sites with mostly static content unless you configure caching explicitly.

Crafting Custom Cache Rules

After digging through Cloudflare's documentation, I realized I needed to create custom Cache Rules via Terraform to:

- Properly cache static assets (images, CSS, JS)

- Cache my RSS feed with appropriate freshness

- Balance edge vs. browser caching policies

Cloudflare's Cache Rules let you define exactly what content gets cached, for how long, and under what conditions. The setup wasn't trivial, but it was worth the effort.

The Image Cache Strategy

My first target was image files. Most images on my site rarely change, so caching them aggressively made sense. Here is the Terraform resource for caching images:

```hcl
resource "cloudflare_ruleset" "cache_rules" {
  zone_id = var.cloudflare_zone_id # assumed variable holding your zone ID
  name    = "Cache rules"          # illustrative name; the provider requires one
  kind    = "zone"
  phase   = "http_request_cache_settings"

  rules {
    action = "set_cache_settings"

    action_parameters {
      edge_ttl {
        mode    = "override_origin"
        default = 43200 # Default edge TTL: 12 hours

        status_code_ttl {
          status_code = 200
          value       = 86400 # Cache successful responses for 1 day
        }

        status_code_ttl {
          # Single-line HCL blocks allow only one argument, so split from/to
          status_code_range {
            from = 400
            to   = 599
          }
          value = 30 # Only cache errors for 30 seconds
        }
      }

      browser_ttl {
        mode    = "override_origin"
        default = 7200 # Browser cache for 2 hours
      }

      cache = true

      serve_stale {
        disable_stale_while_updating = false
      }
    }

    // NOTE: The matches operator would be better for narrowing the rule to specific file types.
    // However, the matches operator is not available on the Free plan.
    // expression = "(http.request.uri.path matches \"^/images/.*\\.(jpg|jpeg|gif|png|webp)$\")"
    expression  = "(starts_with(http.request.uri.path, \"/images/\"))"
    description = "Cache image files"
  }
}
```
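To see what the Free-plan fallback trades away, here is a rough local model of the two expressions in the rule above: the `starts_with` prefix check versus the commented-out regex. It only mimics the matching logic in TypeScript; it is not how Cloudflare evaluates rule expressions, and the sample paths are hypothetical.

```typescript
// Local approximation of: starts_with(http.request.uri.path, "/images/")
function matchesStartsWith(path: string): boolean {
  return path.startsWith("/images/");
}

// Local approximation of the commented-out regex rule:
// http.request.uri.path matches "^/images/.*\.(jpg|jpeg|gif|png|webp)$"
const IMAGE_REGEX = /^\/images\/.*\.(jpg|jpeg|gif|png|webp)$/;
function matchesRegex(path: string): boolean {
  return IMAGE_REGEX.test(path);
}

// Hypothetical paths comparing the two rules
console.log(matchesStartsWith("/images/profile.webp"), matchesRegex("/images/profile.webp")); // true true
console.log(matchesStartsWith("/images/notes.txt"), matchesRegex("/images/notes.txt")); // true false
console.log(matchesStartsWith("/blog/profile.webp"), matchesRegex("/blog/profile.webp")); // false false
```

In other words, the prefix rule also caches any non-image file that happens to live under `/images/`, which is usually acceptable for a directory that only holds images.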

I won't show you all the rules, but I created similar ones for the RSS feed and CSS/JS assets (with different TTLs).

The critical part is not caching error responses for long (see the status_code_ttl block for 4xx and 5xx). For example, if I accidentally push source code before adding the images it references, those images return 404 and that result gets cached. If it were cached for the default long period, visitors might see 404s for a long time, wondering whether I had a typo in an image URL or had done something stupid. To avoid that, I deliberately set a very short TTL for errors (it can even be zero if you want to be stricter).
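The way the `edge_ttl` settings in the rule above combine can be modeled as a small lookup: a matching `status_code_ttl` entry wins, otherwise the rule's `default` applies. This is a sketch of my reading of the rule, assuming the status-code entries don't overlap (as in the rule above); `edgeTtlFor` and the `StatusTtl` type are hypothetical names.

```typescript
// One entry per Terraform status_code_ttl block: exact code or a range.
type StatusTtl =
  | { statusCode: number; value: number }
  | { from: number; to: number; value: number };

// Values copied from the image rule above (seconds)
const DEFAULT_EDGE_TTL = 43200; // 12 hours
const STATUS_TTLS: StatusTtl[] = [
  { statusCode: 200, value: 86400 }, // 1 day for successful responses
  { from: 400, to: 599, value: 30 }, // 30 seconds for 4xx/5xx errors
];

// Return the edge TTL for a response status, falling back to the default.
function edgeTtlFor(status: number): number {
  for (const entry of STATUS_TTLS) {
    const hit =
      "statusCode" in entry
        ? entry.statusCode === status
        : status >= entry.from && status <= entry.to;
    if (hit) return entry.value;
  }
  return DEFAULT_EDGE_TTL;
}

console.log(edgeTtlFor(200)); // 86400
console.log(edgeTtlFor(404)); // 30
console.log(edgeTtlFor(301)); // 43200
```

With these numbers, a cached 404 for a not-yet-deployed image expires at the edge after 30 seconds rather than lingering for the 12-hour default.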

By configuring this Cache Rule, I can finally get cf-cache-status: HIT for my image assets.

```shell
$ curl --silent -I https://kenwagatsuma.com/images/profile.webp | grep "cf-cache-status"
cf-cache-status: HIT
```

RSS Feeds Need Special Treatment

The RSS feed was trickier. It needs to stay fresh, but I also wanted to avoid hitting my origin server on every request. I've written a more detailed post about implementing the RSS feed, but let me revisit it here in the context of Cache Rules.

I settled on a shorter cache TTL and implemented proper ETag support. You need to explicitly enable Respect Strong ETags on each Cache Rule so that Cloudflare respects the ETags returned by your origin server.

```hcl
rules {
  // ...settings for RSS feed...

  action_parameters {
    cache = true

    serve_stale {
      disable_stale_while_updating = false
    }

    respect_strong_etags = true
  }
}
```

The key insight here was implementing proper ETags in my RSS generation code. This way, even when the cache TTL expires, Cloudflare can revalidate with my origin using If-None-Match, and if the content hasn't changed it receives a lightweight 304 instead of downloading the whole feed again.
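The handler below compares `If-None-Match` with strict equality, which works when the client echoes back exactly one strong ETag. HTTP (RFC 9110) defines `If-None-Match` as `*` or a comma-separated list compared with the weak comparison function, so a `W/"..."` tag should still match its strong counterpart, which matters if a strong ETag gets downgraded to a weak one somewhere along the way. A slightly more tolerant check might look like this; the function names are hypothetical.

```typescript
// Strip an optional weak-validator prefix: W/"abc" -> "abc"
function opaqueTag(tag: string): string {
  const t = tag.trim();
  return t.startsWith("W/") ? t.slice(2) : t;
}

// Weak comparison of If-None-Match against the current ETag.
// Returns true when the server may answer 304 Not Modified.
// Assumes tags contain no commas (true for hex hashes).
function ifNoneMatchHits(header: string | null, etag: string): boolean {
  if (header === null) return false;
  if (header.trim() === "*") return true;
  const current = opaqueTag(etag);
  return header.split(",").some((candidate) => opaqueTag(candidate) === current);
}

console.log(ifNoneMatchHits('"abc"', '"abc"')); // true
console.log(ifNoneMatchHits('W/"abc", "xyz"', '"abc"')); // true
console.log(ifNoneMatchHits('"xyz"', '"abc"')); // false
console.log(ifNoneMatchHits(null, '"abc"')); // false
```

In the handler, `if (ifNoneMatchHits(ifNoneMatch, contentHash))` would take the place of the strict equality check.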

```typescript
// Inside the RSS route's loader: `request`, `posts`, and `channelData`
// come from the surrounding code (not shown).
const rssXml = generateRssFeed(posts, channelData);

// Generate an ETag based on the content using SHA-256
const contentHash = await generateETag(rssXml);

// Shared Cache-Control: 1 hour in browsers, 8 hours at the edge (s-maxage)
const cacheControl =
  "public, max-age=3600, s-maxage=28800, stale-while-revalidate=3600";

// When the client's ETag matches, reply 304 Not Modified without a body
const ifNoneMatch = request.headers.get("If-None-Match");

if (ifNoneMatch === contentHash) {
  return new Response(null, {
    status: 304,
    headers: { "ETag": contentHash, "Cache-Control": cacheControl },
  });
}

// Otherwise return the full feed, including the ETag in the 200 response
return new Response(rssXml, {
  headers: {
    "Content-Type": "application/rss+xml",
    "Cache-Control": cacheControl,
    "Vary": "Accept",
    // Note: this is the response time, not when the feed content last changed
    "Last-Modified": new Date().toUTCString(),
    "ETag": contentHash,
    "Content-Length": String(new TextEncoder().encode(rssXml).length),
  },
});
```
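The snippet above relies on a `generateETag` helper that isn't shown. One possible implementation, assuming the Web Crypto API that Cloudflare Workers and recent Node.js releases expose as the global `crypto`, hashes the XML with SHA-256 and wraps the hex digest in double quotes, since ETag values are quoted strings. This is a sketch, not necessarily the helper my site uses.

```typescript
// Hypothetical helper: derive a strong ETag from the response body.
// Uses the Web Crypto API (global `crypto.subtle`).
async function generateETag(content: string): Promise<string> {
  const bytes = new TextEncoder().encode(content);
  const digest = await crypto.subtle.digest("SHA-256", bytes);
  // Convert the 32-byte digest to a 64-character lowercase hex string
  const hex = Array.from(new Uint8Array(digest))
    .map((b) => b.toString(16).padStart(2, "0"))
    .join("");
  // ETag values must be quoted, e.g. "2cf24dba..."
  return `"${hex}"`;
}
```

Because the hash depends only on the XML, the ETag stays stable until the feed content changes, as long as the generated XML is itself deterministic (for example, it doesn't embed the current time in `<lastBuildDate>`).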

Finding the Right Balance

In my opinion, picking the right caching strategy is hard. It takes careful planning and close observation, built on a foundational understanding of how each caching mechanism works: the Cache-Control web standard, the CDN platform, how origin servers return responses, and how all these building blocks interact with each other.

There's an art to choosing cache durations. Set them too long, and users might see outdated content. Set them too short, and you lose the performance benefits of caching.

I followed these principles:

- Edge cache (Cloudflare) longer than browser cache

- Shorter TTLs for dynamic content like RSS feeds

- Much shorter TTLs for error responses

- Leveraging stale-while-revalidate for the best of both worlds

The browser cache TTL is particularly important. I settled on 2 hours for images and 1 hour for everything else - long enough to improve performance during a browsing session but short enough that returning visitors will get fresh content if I've updated something.
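The "edge longer than browser" principle is visible in the RSS feed's header, `public, max-age=3600, s-maxage=28800, stale-while-revalidate=3600`: under the HTTP caching spec, `s-maxage` applies only to shared caches such as a CDN and takes precedence over `max-age` there, while browsers use `max-age`. A small, hypothetical parser makes the split explicit; it handles simple directives only and is not a full Cache-Control implementation.

```typescript
// Parse a Cache-Control header into directive -> value (or true if bare).
function parseCacheControl(header: string): Map<string, string | true> {
  const directives = new Map<string, string | true>();
  for (const part of header.split(",")) {
    const eq = part.indexOf("=");
    const name = (eq === -1 ? part : part.slice(0, eq)).trim().toLowerCase();
    if (name === "") continue;
    const value = eq === -1 ? true : part.slice(eq + 1).trim().replace(/^"|"$/g, "");
    directives.set(name, value);
  }
  return directives;
}

// Freshness lifetimes (seconds) for a browser vs. a shared cache (CDN).
function freshness(header: string) {
  const d = parseCacheControl(header);
  const num = (name: string): number | undefined => {
    const v = d.get(name);
    return typeof v === "string" ? Number(v) : undefined;
  };
  const browser = num("max-age");
  return {
    browser,
    shared: num("s-maxage") ?? browser, // s-maxage overrides max-age for shared caches
    staleWhileRevalidate: num("stale-while-revalidate"),
  };
}

const rss = freshness("public, max-age=3600, s-maxage=28800, stale-while-revalidate=3600");
console.log(rss); // { browser: 3600, shared: 28800, staleWhileRevalidate: 3600 }
```

So a CDN honoring this header may treat the feed as fresh for 8 hours (28800 s) while a browser rechecks after 1 hour (3600 s), and `stale-while-revalidate` allows a stale copy to be served for up to another hour while a refresh happens in the background.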

Results: Cache Hit Ratio Dreams

After implementing these changes, my site's performance improved dramatically. The network tab now showed beautiful "HIT" cache statuses, and pages loaded noticeably faster, especially for visitors outside my region.

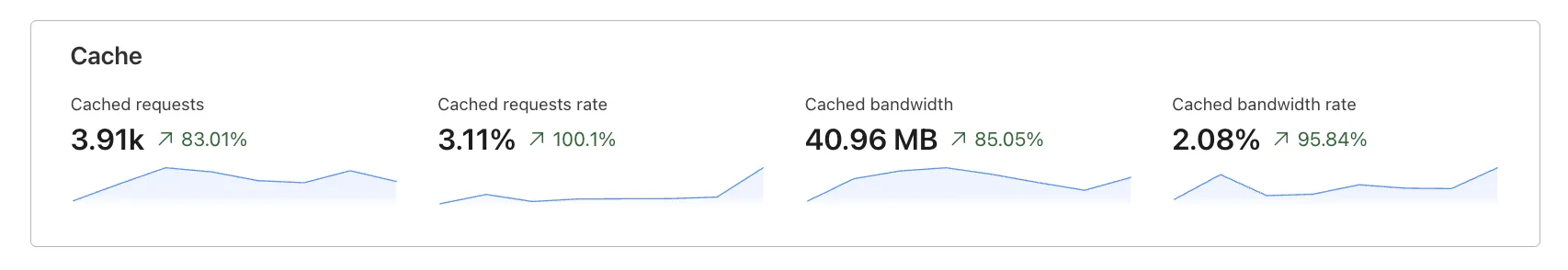

Cloudflare's analytics confirmed what I was seeing: my cache hit ratio for requests rose to above 83.01%, 85.06% more bandwidth was served through the cache, and the cached request rate increased by 100.1%. I love it.

Lessons Learned

This optimization journey taught me several lessons, but the top one is this: don't assume defaults; measure for yourself. It seems pretty basic for me as a professional SRE, but I need to bring the same professional mindset to my weekend projects, too. Check what's actually happening with your resources.

I also enjoyed finding the delicate balance between freshness and performance, which required me to understand the foundations of each building block. Once I understood all of them, configured the caches properly, and saw performance improve dramatically, it was a really rewarding moment.

Optimizing caching might not be the most exciting part of web development, but the performance improvements you get are definitely worth the effort. Plus, there's something incredibly satisfying about seeing those cache HIT statuses in your dashboard!