Strimzi: Kubernetes Operator for Apache Kafka and Migration Process

2021-06-29

An in-depth case study of migrating from Confluent Cloud to self-managed Apache Kafka using Strimzi on Kubernetes, detailing the design process, implementation challenges, monitoring setup, and network access strategies for production deployment.

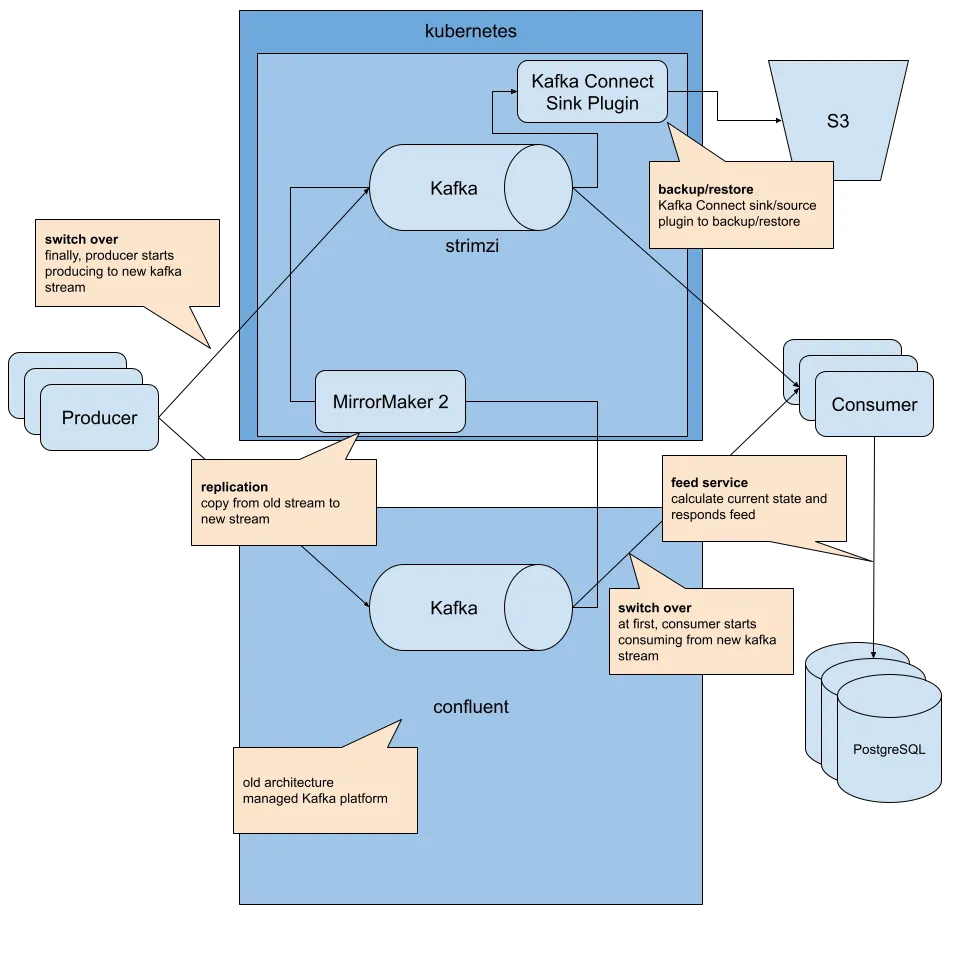

Recently, the Apache Kafka platform migration project I had been working on for nearly six months was successfully completed. Previously, we used Confluent Cloud, a managed service. We have now moved to a self-managed Kafka cluster deployed with Strimzi on a Kubernetes cluster built on Amazon EKS.

This was a large-scale project that involved not only the web team but also search and machine learning teams. As an SRE team member, I was responsible for everything from design, PoC implementation, monitoring, and alert setup to the actual migration. Seeing the final production applications switch over was an emotional moment. We encountered several challenges due to our lack of experience with Kafka operations, but those challenges became valuable learning experiences.

Once the migration was complete, we celebrated at a local pub wearing our project T-shirts*1. There were various reasons and background factors behind our decision to move away from a managed service, which I hope to cover in a separate company blog post.

While the real operational challenges begin now, I would like to take this opportunity to reflect on Strimzi.

What is Strimzi?

Strimzi is a "Kubernetes Operator for running Apache Kafka." The Strimzi software suite is developed under the github.com/strimzi organization, with the core operator maintained at github.com/strimzi/strimzi-kafka-operator.

It was accepted as a CNCF Sandbox project on August 28, 2019*2, and many of its core contributors and maintainers are from Red Hat.

According to the blog post "Insights from the first Strimzi survey" published on September 29, 2020, over 40% of the 40 respondents were using Strimzi in production, with 25% running Kafka clusters on Amazon EKS, just like us.

What is a Kubernetes Operator?

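A Kubernetes Operator is an architectural pattern that pairs Custom Resource Definitions (CRDs) with a custom controller to deploy and operate complex stateful applications, such as Apache Kafka and Apache ZooKeeper, within Kubernetes. Users declare the desired state in a custom resource, and the controller continuously reconciles the actual state of the cluster toward it.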
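At the heart of an operator is a reconcile loop: compare the declared state with what actually exists and act on the difference. The following is a minimal, self-contained Python sketch of that idea, not Strimzi's implementation (which is written in Java and manages many more resource types). `KafkaSpec` and `ClusterState` are hypothetical stand-ins for a custom resource and the Kubernetes API server.

```python
from dataclasses import dataclass, field


@dataclass
class KafkaSpec:
    """Hypothetical, simplified custom resource: the user only declares a broker count."""
    name: str
    replicas: int


@dataclass
class ClusterState:
    """Stand-in for the API server: names of the broker pods that currently exist."""
    pods: set = field(default_factory=set)


def desired_pods(spec: KafkaSpec) -> set:
    # Follows the <cluster>-kafka-<ordinal> naming of Strimzi broker pods, for illustration only.
    return {f"{spec.name}-kafka-{i}" for i in range(spec.replicas)}


def reconcile(spec: KafkaSpec, state: ClusterState) -> list:
    """One reconcile pass: diff desired vs. actual state and act on the difference."""
    want = desired_pods(spec)
    actions = []
    for pod in sorted(want - state.pods):
        state.pods.add(pod)
        actions.append(f"create {pod}")
    for pod in sorted(state.pods - want):
        state.pods.discard(pod)
        actions.append(f"delete {pod}")
    return actions


if __name__ == "__main__":
    state = ClusterState()
    print(reconcile(KafkaSpec("my-cluster", 3), state))  # creates three broker pods
    print(reconcile(KafkaSpec("my-cluster", 3), state))  # [] : already converged
    print(reconcile(KafkaSpec("my-cluster", 2), state))  # deletes my-cluster-kafka-2
```

Because each pass only acts on the difference, running the loop repeatedly is safe: a converged cluster produces no actions, and a changed spec produces exactly the steps needed to reach it.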

Deploying Apache Kafka on Kubernetes requires more than just Kafka itself; several subcomponents also need to be managed:

- Apache Kafka

- Apache ZooKeeper

- Kafka Connect Cluster (if required)

- Kafka MirrorMaker (if required)

- Kafka Bridge (if required)

- RBAC (Role, ClusterRole, binding resources, etc.)

- Load Balancers

- Monitoring components/configuration (e.g., Prometheus JMX exporter)

- ACL components/configuration (e.g., user management)

Writing YAML manifests for all of these components from scratch is impractical without extensive experience. On top of that come tasks such as configuring networking, setting up mTLS and distributing client certificates, managing persistent volumes (PVs), and configuring topics.

Strimzi abstracts much of this complexity by providing an API (expressed as CRD schemas), making Kafka cluster operations more manageable.
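To give a sense of what that API looks like, here is a sketch that builds a minimal `Kafka` custom resource as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts just like YAML. The field names follow Strimzi's `kafka.strimzi.io/v1beta2` schema as we understand it; the cluster name, volume size and broker config values are illustrative assumptions, not our production settings.

```python
import json


def kafka_resource(name: str, replicas: int = 3, storage_size: str = "100Gi") -> dict:
    """Build a minimal Strimzi `Kafka` custom resource (v1beta2 schema) as a dict.

    All concrete values here are illustrative assumptions.
    """
    persistent = {"type": "persistent-claim", "size": storage_size, "deleteClaim": False}
    return {
        "apiVersion": "kafka.strimzi.io/v1beta2",
        "kind": "Kafka",
        "metadata": {"name": name},
        "spec": {
            "kafka": {
                "replicas": replicas,
                "listeners": [
                    # In-cluster TLS listener; clients outside Kubernetes need an external one.
                    {"name": "tls", "port": 9093, "type": "internal", "tls": True},
                ],
                "config": {
                    "default.replication.factor": replicas,
                    "min.insync.replicas": max(1, replicas - 1),
                },
                "storage": persistent,
            },
            "zookeeper": {"replicas": 3, "storage": persistent},
            # The Topic and User Operators reconcile KafkaTopic / KafkaUser resources.
            "entityOperator": {"topicOperator": {}, "userOperator": {}},
        },
    }


if __name__ == "__main__":
    # e.g. `python kafka_cr.py | kubectl apply -f -`
    print(json.dumps(kafka_resource("my-cluster"), indent=2))
```

A few dozen lines of declarative spec stand in for the pods, Services, certificates and ConfigMaps that the operator generates and keeps in sync.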

Limitations of Strimzi

Despite its advantages, Strimzi also has drawbacks. Features that Strimzi does not support cannot be used directly. For example, Kafka v2.8.0, released on April 19, 2021, shipped an early-access implementation of the long-awaited KIP-500, which removes the dependency on ZooKeeper*3. Strimzi added support for Kafka v2.8.0 in v0.23.0 on May 13, 2021. A turnaround of less than a month is impressive, but some delay is unavoidable.

Bugs in the Operator itself also constrain what we can do operationally. At times, we had to read the Java source code of the Strimzi Operator to debug issues.

Despite these limitations, using Strimzi was still more reliable than managing all Kafka subcomponents via YAML manually. Given its adoption as a CNCF project and production use by other companies, as well as its promising future and maintainability, we decided to use Strimzi.

How We Built with Strimzi

We started with a Proof of Concept (PoC) in a sandbox cluster. Deploying the Kafka cluster was straightforward thanks to Strimzi CRDs. Next, we deployed Kafka MirrorMaker 2.0 (MM2) to transfer data from Confluent Cloud and validated its operation.
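MM2 is itself declared as a custom resource. The sketch below builds a minimal `KafkaMirrorMaker2` resource as a Python dict, using field names from Strimzi's v1beta2 schema as we understand them. The aliases, resource name and replication factors are hypothetical, and the authentication and TLS settings a managed source cluster requires are deliberately omitted. The helper `remote_topic_name` shows a detail worth planning for in a migration: with MM2's default replication policy, mirrored topics on the target cluster are prefixed with the source cluster's alias.

```python
def mirror_maker2_resource(source_bootstrap: str, target_bootstrap: str,
                           topics_pattern: str = ".*") -> dict:
    """Minimal Strimzi KafkaMirrorMaker2 resource (v1beta2) replicating source -> target.

    Hypothetical sketch: credentials/TLS for the source cluster are not included.
    """
    return {
        "apiVersion": "kafka.strimzi.io/v1beta2",
        "kind": "KafkaMirrorMaker2",
        "metadata": {"name": "migration-mm2"},
        "spec": {
            "replicas": 1,
            # MM2 runs on Kafka Connect; here its Connect cluster uses the target cluster.
            "connectCluster": "target",
            "clusters": [
                {"alias": "source", "bootstrapServers": source_bootstrap},
                {"alias": "target", "bootstrapServers": target_bootstrap},
            ],
            "mirrors": [{
                "sourceCluster": "source",
                "targetCluster": "target",
                "sourceConnector": {"config": {"replication.factor": 3}},
                "checkpointConnector": {"config": {"checkpoints.topic.replication.factor": 3}},
                "topicsPattern": topics_pattern,
                "groupsPattern": ".*",
            }],
        },
    }


def remote_topic_name(source_alias: str, topic: str, separator: str = ".") -> str:
    """Name that MM2's default replication policy gives a mirrored topic on the target."""
    return f"{source_alias}{separator}{topic}"
```

For example, `remote_topic_name("source", "orders")` returns `"source.orders"`, so consumers switching clusters must either use the prefixed names or rely on a differently configured replication policy.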

For monitoring, we deployed JMX exporters and Prometheus servers in a separate namespace and integrated them with an existing production monitoring EKS cluster*4. This let the new cluster plug directly into our existing monitoring and alerting systems.
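Once JMX metrics are exposed in Prometheus format, alerting is a matter of evaluating conditions over scraped samples. A check like this would typically be a PromQL alerting rule such as `kafka_server_replicamanager_underreplicatedpartitions > 0`, evaluated by Prometheus and routed through Alertmanager. The Python sketch below does the same evaluation by hand on raw exposition text, to show what the data looks like. The metric name assumes a JMX exporter rule set like Strimzi's example metrics configuration, and the `pod` label is an assumption; the parser handles only simple `name{labels} value` lines.

```python
import re

# Simple Prometheus text-format sample: name{labels} value
# (timestamps, label escaping and histograms are ignored in this sketch).
SAMPLE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{[^}]*\})?\s+(\S+)')
LABEL = re.compile(r'(\w+)="([^"]*)"')


def parse_samples(text: str):
    """Yield (metric_name, labels_dict, value) from Prometheus exposition text."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        m = SAMPLE.match(line)
        if m:
            labels = dict(LABEL.findall(m.group(2) or ""))
            yield m.group(1), labels, float(m.group(3))


def under_replicated_alerts(
        text: str,
        metric: str = "kafka_server_replicamanager_underreplicatedpartitions") -> list:
    """Return the pods reporting under-replicated partitions (value > 0)."""
    return sorted(
        labels.get("pod", "unknown")
        for name, labels, value in parse_samples(text)
        if name == metric and value > 0
    )


if __name__ == "__main__":
    scrape = """
# TYPE kafka_server_replicamanager_underreplicatedpartitions gauge
kafka_server_replicamanager_underreplicatedpartitions{pod="my-cluster-kafka-0"} 0.0
kafka_server_replicamanager_underreplicatedpartitions{pod="my-cluster-kafka-1"} 4.0
"""
    print(under_replicated_alerts(scrape))
```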

For logging, we leveraged our internal EKS logging pipeline built on Kinesis Streams, Kinesis Firehose, AWS Lambda, and Amazon Elasticsearch (AES). Fluentd was deployed as a DaemonSet in the same EKS cluster and used awslabs/aws-fluent-plugin-kinesis to push logs to Kinesis Streams. To make logs easier to search, we enriched them with Kubernetes metadata*5 using fabric8io/fluent-plugin-kubernetes_metadata_filter.
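Metadata enrichment starts from the log file itself: on each node, container logs live under `/var/log/containers/` with file names of the form `<pod>_<namespace>_<container>-<container_id>.log`. The Python sketch below shows only that first step, extracting identifiers from the path and attaching them to a record. The regex is a simplified assumption modelled on that naming convention, not the plugin's exact pattern; the real filter then looks up pod and node information from the Kubernetes API.

```python
import re

# Simplified pattern for /var/log/containers/<pod>_<namespace>_<container>-<id>.log
# (an assumption modelled on the kubelet's naming convention, not the plugin's regex).
LOG_PATH = re.compile(
    r"/var/log/containers/"
    r"(?P<pod_name>[^_]+)_(?P<namespace>[^_]+)_"
    r"(?P<container_name>.+)-(?P<container_id>[a-f0-9]{64})\.log$"
)


def enrich(record: dict, path: str) -> dict:
    """Return a copy of the record with Kubernetes identifiers parsed from its file path."""
    m = LOG_PATH.search(path)
    if m is None:
        return record  # not a container log; leave it untouched
    return {**record, "kubernetes": m.groupdict()}


if __name__ == "__main__":
    cid = "0" * 64
    path = f"/var/log/containers/my-cluster-kafka-0_kafka_kafka-{cid}.log"
    print(enrich({"log": "Started Kafka broker"}, path)["kubernetes"]["pod_name"])
```

With `namespace` and `pod_name` on every record, searching for "all logs from broker 1 in the kafka namespace" becomes a simple field filter rather than a free-text search.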

We also created performance tooling based on Kafka Tools, specifically kafka-consumer-perf-test and kafka-producer-perf-test. Performance test definitions were written in YAML, deployed as pods, and results were converted into metrics using google/mtail for visualization in Prometheus/Grafana.
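Our pipeline used mtail for the conversion step; the Python function below is a stand-in that illustrates the same idea, turning the final summary line of `kafka-producer-perf-test` into Prometheus-format gauges. The sample line follows the tool's summary format, while the metric names and the `test` label are hypothetical choices for this sketch.

```python
import re

# Final summary line printed by kafka-producer-perf-test, e.g.
# "100000 records sent, 49975.012494 records/sec (47.66 MB/sec), 3.56 ms avg latency,
#  372.00 ms max latency, 2 ms 50th, 9 ms 95th, 14 ms 99th, 17 ms 99.9th."
SUMMARY = re.compile(
    r"(?P<records>\d+) records sent, (?P<rps>[\d.]+) records/sec \((?P<mbps>[\d.]+) MB/sec\), "
    r"(?P<avg>[\d.]+) ms avg latency, (?P<max>[\d.]+) ms max latency, "
    r"(?P<p50>\d+) ms 50th, (?P<p95>\d+) ms 95th, (?P<p99>\d+) ms 99th, (?P<p999>\d+) ms 99.9th"
)


def to_prometheus(line: str, test_name: str) -> str:
    """Convert a producer perf-test summary line into Prometheus text-format gauges."""
    m = SUMMARY.search(line)
    if m is None:
        return ""  # periodic progress lines lack percentiles and are skipped
    g = {k: float(v) for k, v in m.groupdict().items()}
    gauges = {
        "perf_producer_records_total": g["records"],
        "perf_producer_records_per_second": g["rps"],
        "perf_producer_megabytes_per_second": g["mbps"],
        "perf_producer_latency_avg_ms": g["avg"],
        "perf_producer_latency_max_ms": g["max"],
    }
    out = [f'{name}{{test="{test_name}"}} {value}' for name, value in gauges.items()]
    for quantile, key in (("0.5", "p50"), ("0.95", "p95"), ("0.99", "p99"), ("0.999", "p999")):
        out.append(f'perf_producer_latency_ms{{test="{test_name}",quantile="{quantile}"}} {g[key]}')
    return "\n".join(out)
```

Labelling each run with its test definition name makes it easy to compare runs side by side in Grafana.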

Finally, we deployed the production and pre-production clusters based on the PoC setup. The EKS clusters were managed using cookpad/terraform-aws-eks, which provided an opinionated API optimized for our use case.

Our Kubernetes CI/CD is still evolving, but currently, we use Jenkins/CodeBuild, with CI verifying changes using kubectl apply --dry-run --validate and kustomize build && kubectl diff. When changes are merged into master, kubectl apply is executed automatically.
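One subtlety in a diff-based CI step is that `kubectl diff` exits with status 1 when it finds differences, which a naive pipeline treats as a failure on every pull request that changes manifests. The sketch below shows one way a CI wrapper might handle this, assuming the rendered manifests are passed to `kubectl diff` on stdin. The function names are hypothetical; exit status 0 means no differences, 1 means differences were found, and anything greater means kubectl or the diff program failed.

```python
import subprocess


def interpret_diff_exit(code: int) -> str:
    """Map a `kubectl diff` exit status to a CI outcome."""
    if code == 0:
        return "no-changes"
    if code == 1:
        return "changes"  # expected when a PR modifies manifests; not a failure
    return "error"


def run_diff(overlay_dir: str) -> str:
    # Render first, so a kustomize error is never mistaken for "changes found".
    rendered = subprocess.run(["kustomize", "build", overlay_dir],
                              capture_output=True, text=True)
    if rendered.returncode != 0:
        return "error"
    # Compare the rendered manifests (read from stdin) against the live cluster.
    proc = subprocess.run(["kubectl", "diff", "-f", "-"], input=rendered.stdout, text=True)
    return interpret_diff_exit(proc.returncode)
```

Only the `"error"` outcome should fail the build; `"changes"` is the normal case for a pull request and is what reviewers want to see in the CI output.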

How Do We Access Strimzi?

Strimzi provides several options for network access.

The first option is using NodePort *6. This method is highly maintainable and has almost no implementation cost since it does not require deploying additional processes. However, due to security concerns about exposing ports directly within the VPC and the lack of abstraction that comes with direct port access, we decided against it.

The second option is using Ingress*7. Because Kafka clients speak a TCP-based binary protocol rather than HTTP, this requires an Ingress controller with TLS passthrough, such as the NGINX Ingress Controller, and every client connection passes through that controller. As the referenced article notes, this raises performance concerns. While it was not an immediate issue, we weighed it against our target SLIs/SLOs and the long-term growth of our service and ultimately rejected this approach.

"So Ingress might not be the best option when most of your applications using Kafka are outside of your Kubernetes cluster and you need to handle 10s or 100s MBs of throughput per second."

The third option is using a LoadBalancer *8. We ultimately selected this approach. In this architecture, we deploy a separate Network Load Balancer (NLB) for each Kafka broker and an additional NLB for the Bootstrap Connection *9. Since NLBs generally provide sufficient performance and CloudWatch monitoring is available by default, this approach offers better maintainability compared to managing an Ingress Controller ourselves. The only drawback is infrastructure cost, but with three Kafka brokers and a total of four NLBs, our cost estimation remained within an acceptable range.
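In Strimzi, this setup is a listener of type `loadbalancer`: the operator creates one LoadBalancer Service per broker plus one bootstrap Service, and on EKS each Service gets its own load balancer. The Python sketch below generates such a listener with the `service.beta.kubernetes.io/aws-load-balancer-type: nlb` annotation on every Service, using field names from Strimzi's v1beta2 listener schema as we understand it. The port and TLS settings are assumptions, and authentication and internal-NLB annotations are left out.

```python
NLB_ANNOTATIONS = {"service.beta.kubernetes.io/aws-load-balancer-type": "nlb"}


def loadbalancer_listener(brokers: int, port: int = 9094) -> dict:
    """Strimzi v1beta2 `loadbalancer` listener that requests an NLB for every Service."""
    return {
        "name": "external",
        "port": port,
        "type": "loadbalancer",
        "tls": True,
        "configuration": {
            # One Service (and thus one NLB) for the bootstrap connection...
            "bootstrap": {"annotations": dict(NLB_ANNOTATIONS)},
            # ...and one per broker, so clients can reach each partition leader directly.
            "brokers": [{"broker": i, "annotations": dict(NLB_ANNOTATIONS)}
                        for i in range(brokers)],
        },
    }


def nlb_count(brokers: int) -> int:
    """Number of NLBs this layout creates: one per broker plus one for bootstrap."""
    return brokers + 1
```

`nlb_count(3)` returns 4, matching our three-broker cluster, and the count grows linearly as brokers are added, which is the cost trade-off mentioned above.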

An alternative variation of this third option involves reusing a single NLB *10. While this approach reduces load balancer costs, it increases deployment complexity and maintenance overhead. Therefore, we opted against it.

Why Strimzi?

A fundamental decision in this migration was whether to use a managed service or operate our own Kafka cluster.

For managed services, Confluent Cloud and Amazon MSK were strong contenders. To be clear, Confluent Cloud is an excellent service. Had we chosen to stay with a managed solution, Confluent Cloud would have remained our top choice thanks to its strong support and its own high-quality tooling, such as Confluent Replicator.

However, the primary reason for our migration from Confluent Cloud to Strimzi was a company-wide decision to operate our own Kafka cluster. As our core application relied more and more on Kafka, the platform became increasingly important to us. We wanted greater flexibility in data backups, Kafka cluster configuration, metrics collection, and networking architecture. We accepted the additional maintenance and implementation costs because we wanted to accelerate the adoption of an event-driven architecture centered on Kafka.

Given our team's strong Kubernetes expertise and our long-term platform strategy, Amazon EKS was a natural choice. We chose Strimzi because, as mentioned earlier, the benefits of the Operator pattern outweighed its drawbacks.

We also considered Confluent Operator, but it requires an enterprise subscription. Still, I would like to see how it is implemented someday.

Conclusion

This article reviewed our migration to Strimzi. Although I initially intended to keep it brief, it became a lengthy reflection on a meaningful project.

Beyond technical insights into Kafka and Strimzi, this project gave me invaluable experience. Working closely with our Global SRE team, we collaborated intensively under tight deadlines. I have immense respect for my colleagues who contributed to this project. Having dependable teammates to rely on, receiving sharp insights from unexpected perspectives, and working alongside a tech lead who kept the atmosphere positive, even during Friday-night incidents, made this an incredible experience. I look forward to the operational knowledge we will continue to build.

*1: A novelty T-shirt proposed by an SRE and other members to boost morale during the project, which was actually ordered.

*2: https://www.cncf.io/sandbox-projects/, https://strimzi.io/blog/2019/09/06/cncf/

*3: Note that this ZooKeeper-less mode is not yet recommended for production use.

*4: Thanos is used as a long-term storage solution for Prometheus, along with Alertmanager and Grafana.

*5: Information on pods and nodes.

*6: https://strimzi.io/blog/2019/04/23/accessing-kafka-part-2/

*7: https://strimzi.io/blog/2019/05/23/accessing-kafka-part-5/

*8: https://strimzi.io/blog/2019/05/13/accessing-kafka-part-4/

*9: In Kafka, producers and consumers communicate directly with the brokers that hold the partitions they write to or read from, using Kafka's own binary protocol over TCP. Before doing so, however, a client must discover the cluster layout by sending a Metadata request to learn which brokers hold the relevant partitions. Any broker can answer this request, so a client only needs to reach one broker first. This initial connection, the bootstrap connection, is the client's entry point to the cluster.

*10: https://strimzi.io/blog/2020/01/02/using-strimzi-with-amazon-nlb-loadbalancers/
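The bootstrap flow in footnote *9 is also why every broker needs its own externally reachable address. The Python sketch below simulates the client side: fetch metadata through the bootstrap address, pick a partition for a keyed record, and connect directly to that partition's leader. The broker addresses, topic and metadata are hypothetical, and `choose_partition` uses CRC32 for brevity, whereas the Java client's default partitioner hashes keys with murmur2.

```python
import zlib

# Hypothetical cluster metadata, as any broker would describe it in a Metadata response.
BROKERS = {0: "broker-0.example.internal:9094",
           1: "broker-1.example.internal:9094",
           2: "broker-2.example.internal:9094"}
LEADERS = {("orders", 0): 0, ("orders", 1): 1, ("orders", 2): 2}  # (topic, partition) -> leader id


def fetch_metadata(bootstrap_address: str):
    """Stand-in for the Metadata request sent over the bootstrap connection."""
    return BROKERS, LEADERS


def choose_partition(key: bytes, partition_count: int) -> int:
    # Simplified hashing: the Java client's default partitioner uses murmur2, not CRC32.
    return zlib.crc32(key) % partition_count


def route(bootstrap_address: str, topic: str, key: bytes) -> str:
    """Return the broker address a producer would send a keyed record to."""
    brokers, leaders = fetch_metadata(bootstrap_address)  # 1. discover the cluster
    partition_count = sum(1 for (t, _) in leaders if t == topic)
    partition = choose_partition(key, partition_count)  # 2. pick a partition
    return brokers[leaders[(topic, partition)]]  # 3. connect to its leader directly


if __name__ == "__main__":
    print(route("kafka-bootstrap.example.internal:9094", "orders", b"user-42"))
```

Only step 1 goes through the bootstrap address; step 3 uses whatever address the broker advertises, which is why the LoadBalancer approach gives each broker its own NLB.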