AWS DynamoDB Accelerator (DAX) Revisited

I recently wrote an article for the company blog titled "Introduction of DynamoDB Accelerator (DAX) Use Case in Ad Delivery Servers." It describes how we use DAX, covering what to consider when selecting it, the results we obtained, and what we learned from operating and maintaining it.

https://techlife.cookpad.com/entry/dynamodb-accelerator-usecase-adserver

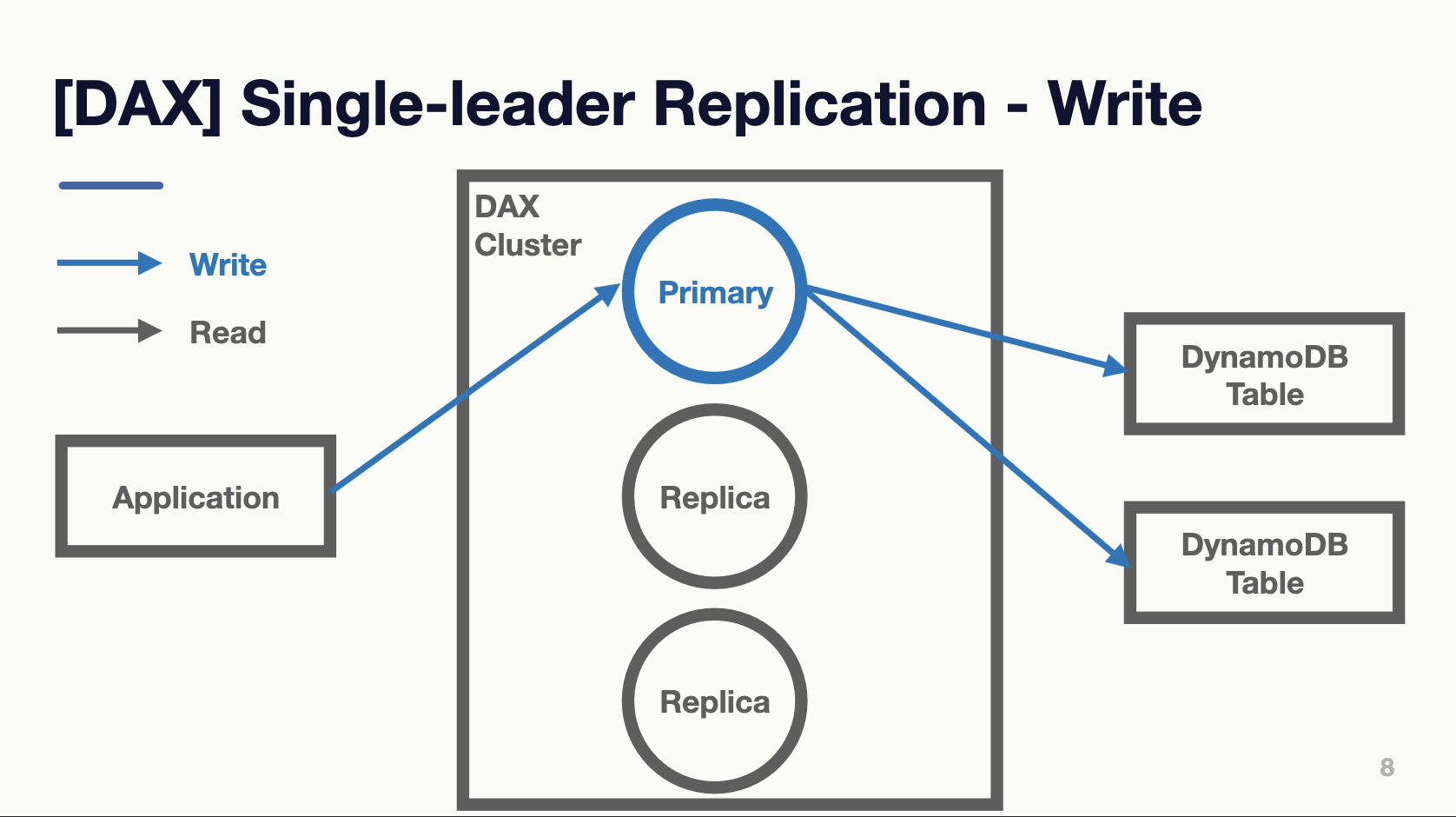

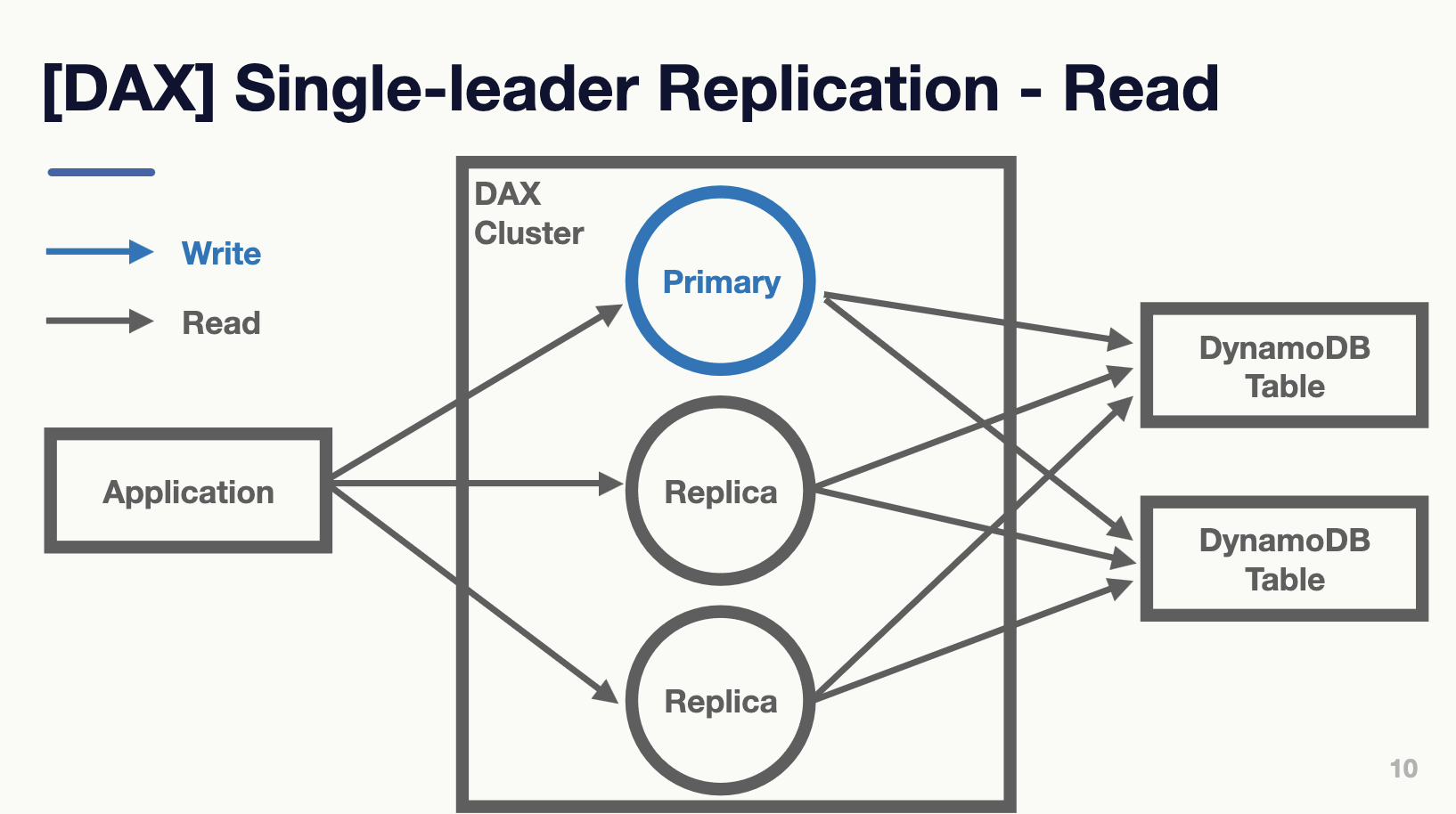

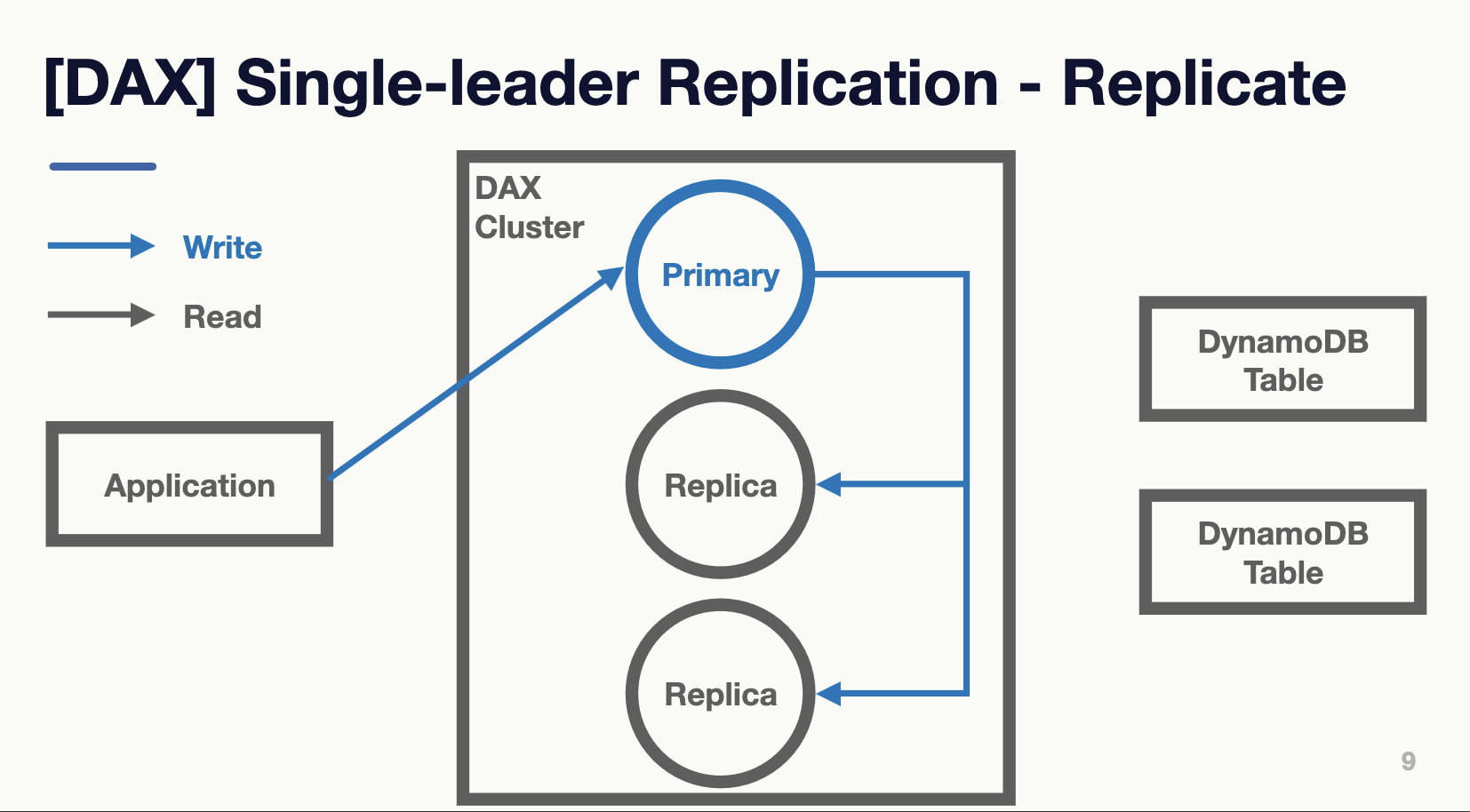

In short, DAX is an in-memory cache store dedicated to DynamoDB. DynamoDB itself imposes various constraints on users (e.g., limited query operations, its indexing model, transactions, and eventual consistency) in exchange for a high-performance NoSQL option that scales writes. Similarly, as described in the blog article, DAX imposes its own constraints (e.g., Global TTL, a single-leader design, and the quality of its SDKs) in exchange for high performance on read workloads.
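To make the "Global TTL" constraint concrete, here is a minimal sketch of a read-through cache in which one TTL applies to every entry, roughly the way a DAX cluster applies a single item-cache TTL to all items. It is only an illustration under that assumption: the class name `GlobalTTLReadThroughCache` and the `loader` callback (standing in for a DynamoDB read) are hypothetical, and this is not how DAX is implemented internally. In practice the DAX client is used as a drop-in replacement for the DynamoDB client rather than as a wrapper like this.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class GlobalTTLReadThroughCache:
    """Hypothetical read-through cache: every entry shares one TTL."""

    def __init__(
        self,
        loader: Callable[[Hashable], Any],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader      # hypothetical stand-in for a DynamoDB read on a miss
        self._ttl = ttl_seconds    # one TTL for all keys; no per-item override
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            # Entry still within the shared TTL: serve it from memory.
            self.hits += 1
            return entry[1]
        # Miss or expired entry: read from the backing store and cache the result.
        self.misses += 1
        value = self._loader(key)
        self._entries[key] = (now + self._ttl, value)
        return value
```

Because the TTL is a property of the whole cache rather than of each item, data that changes every few seconds and data that never changes are kept for the same amount of time. Choosing that one value is the trade-off the Global TTL constraint forces on you.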

Therefore, if the application's requirements match, the combination of DynamoDB + DAX can be a good option that scales both reads and writes while keeping infrastructure costs low.

Costs can be kept low because of how DynamoDB is billed. It offers two capacity modes: with On-Demand, you pay per read and write request; with Provisioned, you pay for the Read Capacity Units (RCU) and Write Capacity Units (WCU) you provision. Either way, DynamoDB's cost grows with read throughput, so in a read-heavy workload where every request goes to DynamoDB, you may end up paying more than expected. Reads served by DAX never reach the table, while a DAX cluster is billed per node-hour, so beyond a certain request volume, caching the data in a DAX cluster becomes cheaper overall.
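The break-even reasoning can be sketched as a small cost model. Without a cache, monthly read cost is $R \cdot p$ for $R$ reads at price $p$ per read. With a cache of hit ratio $h$, only misses reach DynamoDB, so the cost is $R(1-h)p + C$, where $C$ is the cluster's node-hour cost. Setting the two equal gives a break-even volume of $R = C / (h p)$. This is a simplified sketch that assumes On-Demand-style per-request billing, treats every read as one billable unit (ignoring item size and consistency mode), and ignores writes. All prices are hypothetical placeholders to be replaced with figures from the AWS pricing pages.

```python
from dataclasses import dataclass


@dataclass
class ReadCostInputs:
    reads_per_month: float          # total read requests per month
    price_per_million_reads: float  # hypothetical DynamoDB read price (USD)
    cache_hit_ratio: float          # fraction of reads served by the cache, 0.0 to 1.0
    cache_nodes: int                # number of nodes in the cache cluster
    node_price_per_hour: float      # hypothetical price per node-hour (USD)
    hours_per_month: float = 730.0


def cluster_cost(x: ReadCostInputs) -> float:
    # The cache cluster costs the same regardless of request volume.
    return x.cache_nodes * x.node_price_per_hour * x.hours_per_month


def monthly_cost_without_cache(x: ReadCostInputs) -> float:
    # Every read is billed by DynamoDB.
    return x.reads_per_month / 1_000_000 * x.price_per_million_reads


def monthly_cost_with_cache(x: ReadCostInputs) -> float:
    # Only cache misses reach DynamoDB; the cluster adds a fixed cost.
    misses = x.reads_per_month * (1.0 - x.cache_hit_ratio)
    return misses / 1_000_000 * x.price_per_million_reads + cluster_cost(x)


def break_even_reads_per_month(x: ReadCostInputs) -> float:
    # Solve R * p = R * (1 - h) * p + C  =>  R = C / (h * p).
    if x.cache_hit_ratio <= 0:
        return float("inf")  # a cache that never hits never pays for itself
    price_per_read = x.price_per_million_reads / 1_000_000
    return cluster_cost(x) / (x.cache_hit_ratio * price_per_read)
```

Above the break-even volume, each additional read costs only $(1-h)p$ instead of $p$, so the gap in favor of the cache widens as traffic grows.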

Of course, if the only goal is to reduce infrastructure costs, other cache stores work just as well: ElastiCache (Redis/Memcached), an in-memory cache inside the application, or whatever you prefer.

So in many cases, the story goes like this: "We first built the service on DynamoDB and launched it. As the service grew, read and write requests increased, and so did infrastructure costs. Because our read workloads also had strict performance requirements, we introduced DAX to cut costs while improving latency."

In our case, we used DAX from the application's initial implementation. We had previously run DynamoDB behind ad delivery servers and seen latency become unstable as RPS increased, and this time the performance requirement was demanding: a p95 latency of 15 ms.

One more thing I would like to see improved is visibility into the working set held by DAX nodes and the access pattern for each partition key. This information is usually unnecessary, and not having to think about it is a sign of a simple design, but there are times, such as during incidents, when you need it. CloudWatch Contributor Insights for DynamoDB is currently available as a preview feature, but it does not yet support DAX. Personally, I hope equivalent functionality comes to DAX.
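Until something like Contributor Insights exists for DAX, one possible stopgap is to count partition-key accesses in the application itself and log the top keys periodically. The sketch below assumes that approach; the class `PartitionKeyAccessCounter` and the `ad#…`-style keys in the usage note are hypothetical. It only sees requests made by one process and says nothing about what is actually resident in the DAX item cache, so it approximates the access pattern rather than the working set.

```python
from collections import Counter
from typing import Iterable, List, Tuple


class PartitionKeyAccessCounter:
    """Hypothetical client-side tally of reads per partition key."""

    def __init__(self) -> None:
        self._counts = Counter()

    def record(self, partition_key: str) -> None:
        # Call this next to each read issued to DAX.
        self._counts[partition_key] += 1

    def record_many(self, keys: Iterable[str]) -> None:
        self._counts.update(keys)

    def top(self, n: int) -> List[Tuple[str, int]]:
        # Most frequently read partition keys, highest count first.
        return self._counts.most_common(n)

    def reset(self) -> None:
        # Clear counts, e.g. after emitting them to logs or metrics each interval.
        self._counts.clear()
```

For example, after recording reads for `ad#1`, `ad#2`, `ad#1`, `ad#3`, `ad#1`, `ad#2`, calling `top(2)` returns `[("ad#1", 3), ("ad#2", 2)]`, which is the kind of hot-key view that would help during an incident.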