Mcrouter

When you want to cache data in memory temporarily, Memcached is a well-established and reliable choice. It is not as feature-rich as Redis, but it is simpler and has a straightforward protocol. Its client libraries for various programming languages are also mature, which makes it easy to run in production. It is common to introduce a Memcached cluster to relieve performance bottlenecks in backend databases such as MySQL or PostgreSQL.

Even a single instance scales reasonably well, and managed services such as Amazon ElastiCache for Memcached make it quick to set up. Up to a certain scale, that is.

Mcrouter was originally described in the paper "Scaling Memcache at Facebook" by Facebook (now Meta). Facebook needed to handle 500 million requests per second (RPS) at peak for services like Facebook and Instagram, which required many innovations in its caching platform (no amount of optimization at the RDBMS layer can handle this scale on its own).

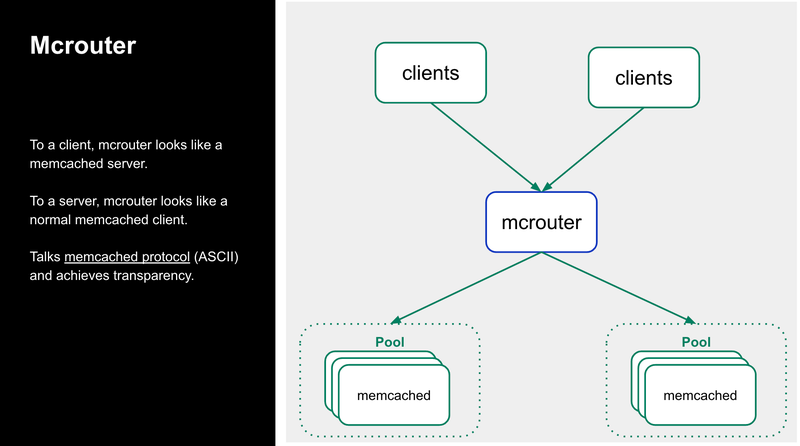

Mcrouter is essentially a sophisticated proxy that speaks the Memcached ASCII protocol and adds a range of features on top. It is developed as open source in the facebook/mcrouter repository on GitHub.

Clients see it as a Memcached server and servers see it as a Memcached client, so it is transparent to both sides. In other words, you do not need custom client code or a forked Memcached server; you simply place Mcrouter in between.
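Because a key-based router only needs the command and the key to choose a destination, a proxy like this can stay transparent while reading very little of each request. The sketch below parses a request line from the Memcached ASCII protocol (for example `get <key>*` or `set <key> <flags> <exptime> <bytes>`). `parse_request_line` is a hypothetical helper written for illustration, not Mcrouter's parser, and it handles only a few commands.

```python
# Illustrative sketch: extract the command and key(s) from a Memcached ASCII
# request line. parse_request_line is hypothetical, not part of Mcrouter.

RETRIEVAL = {"get", "gets"}
STORAGE = {"set", "add", "replace", "append", "prepend", "cas"}


def parse_request_line(line: bytes) -> tuple[str, list[str]]:
    """Return (command, keys) for a request line such as 'get a b'."""
    parts = line.rstrip(b"\r\n").decode("ascii").split()
    if not parts:
        raise ValueError("empty request line")
    command, args = parts[0], parts[1:]
    if command in RETRIEVAL:
        # "get <key>*": every remaining token is a key.
        return command, args
    if command in STORAGE or command == "delete":
        # "set <key> <flags> <exptime> <bytes> ..." and "delete <key>": key comes first.
        return command, args[:1]
    raise ValueError(f"unsupported command: {command}")


# A storage request is a command line followed by a data block of <bytes> bytes;
# only the first line is needed for routing.
request = b"set user:42 0 300 5\r\nhello\r\n"
header = request.split(b"\r\n", 1)[0]
print(parse_request_line(header))          # ('set', ['user:42'])
print(parse_request_line(b"get a b\r\n"))  # ('get', ['a', 'b'])
```

The proxy can then forward the original bytes unchanged to whichever server owns the key, which is what keeps it invisible to both clients and servers.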

To improve scalability and fault tolerance, it implements features such as connection pooling, flexible prefix-based routing, transparent splitting and reassembly of large values, and automatic failover.
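Memcached limits the size of a single item (1 MB by default), so a proxy that handles large values transparently has to split them into several items and reassemble them on read. The sketch below shows one way to do that with a chunk index; the chunk size, the `<key>:chunk:<n>` naming, and the `chunks=<n>` index format are all hypothetical and are not Mcrouter's actual encoding.

```python
# Illustrative sketch of large-value splitting. CHUNK_SIZE, the key naming and
# the index format are hypothetical, not Mcrouter's real encoding.

CHUNK_SIZE = 4  # tiny for the demo; Memcached's default item limit is 1 MB


def split_value(key: str, value: bytes, chunk_size: int = CHUNK_SIZE) -> dict[str, bytes]:
    """Return the items to store: one per chunk plus an index under the original key."""
    chunks = [value[i:i + chunk_size] for i in range(0, len(value), chunk_size)]
    items = {f"{key}:chunk:{n}": chunk for n, chunk in enumerate(chunks)}
    # The index records how many chunks to fetch. A real implementation would
    # also need to tell an index apart from an ordinary small value.
    items[key] = f"chunks={len(chunks)}".encode()
    return items


def join_value(key: str, store: dict[str, bytes]) -> bytes:
    """Read the index entry, then fetch and concatenate every chunk in order."""
    count = int(store[key].decode().split("=", 1)[1])
    return b"".join(store[f"{key}:chunk:{n}"] for n in range(count))


store = split_value("report:2024", b"abcdefghij")  # hypothetical key
print(join_value("report:2024", store))  # b'abcdefghij'
```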

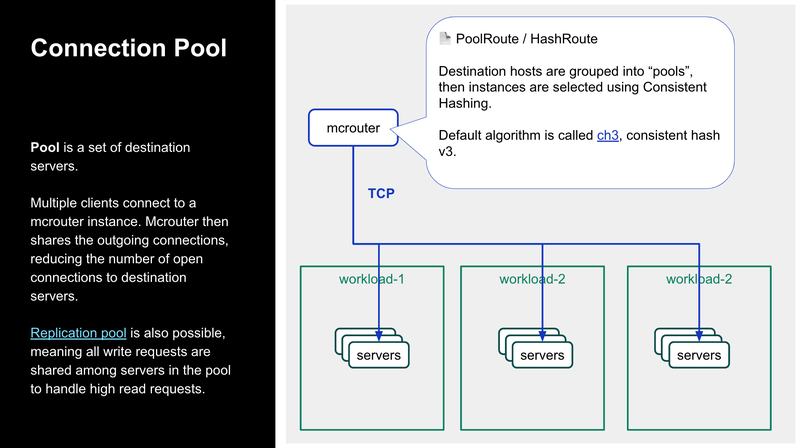

One of these features is pools. Mcrouter groups multiple Memcached instances into a named "pool," keeps the pool configuration to itself so clients never need to know about individual instances, and distributes keys across the pool's instances using consistent hashing. This kind of sharding is not unique to Mcrouter; many Memcached client libraries offer it as well.
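Consistent hashing is what keeps a pool stable when instances are added or removed: only the keys that land on the new instance change owner. The sketch below is a generic hash ring with virtual nodes, shown only to illustrate the technique; Mcrouter ships its own hash functions, and the server addresses here are hypothetical.

```python
import bisect
import hashlib


class HashRing:
    """Consistent hash ring with virtual nodes (illustrative, not Mcrouter's hash)."""

    def __init__(self, servers: list[str], vnodes: int = 100):
        # Place each server at many points on the ring to even out the load.
        ring = sorted(
            (self._hash(f"{server}#{i}"), server)
            for server in servers
            for i in range(vnodes)
        )
        self._points = [point for point, _ in ring]
        self._servers = [server for _, server in ring]

    @staticmethod
    def _hash(text: str) -> int:
        # MD5 is used only for its even distribution, not for security.
        return int.from_bytes(hashlib.md5(text.encode()).digest()[:8], "big")

    def server_for(self, key: str) -> str:
        # First point at or after the key's hash, wrapping around the ring.
        index = bisect.bisect_left(self._points, self._hash(key)) % len(self._points)
        return self._servers[index]


servers = ["10.0.0.1:11211", "10.0.0.2:11211", "10.0.0.3:11211"]  # hypothetical
before = HashRing(servers)
after = HashRing(servers + ["10.0.0.4:11211"])
keys = [f"user:{n}" for n in range(1000)]
moved = [k for k in keys if before.server_for(k) != after.server_for(k)]
print(len(moved) / len(keys))  # fraction of keys that changed owner
print(all(after.server_for(k) == "10.0.0.4:11211" for k in moved))  # True
```

With naive `hash(key) % N` sharding, adding a fourth server would remap most keys; here only the share that now belongs to the new server moves, so most of the cache stays warm.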

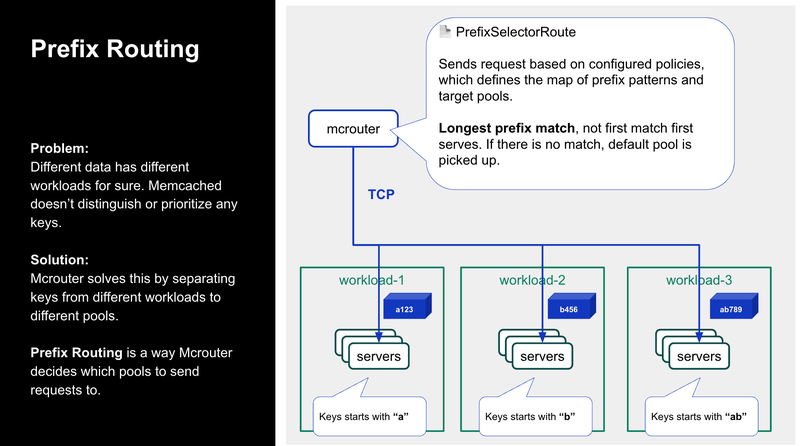

Routing is also flexible. For instance, prefix routing picks a destination based on the longest prefix match of an object's key. This lets you assign pools with different capacities to different workloads: for example, Rails page-cache keys can go to one pool (workload 1) and ActiveRecord cache keys to another (workload 2). By sizing and tuning each pool's instances independently, you can give each workload the pool that suits it best and improve performance further.
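Longest-prefix matching can be sketched in a few lines. In Mcrouter this is expressed in its routing configuration rather than in code; the class below only illustrates the matching rule, and the key prefixes and pool names are hypothetical.

```python
class PrefixRouter:
    """Route a key to the pool whose prefix is the longest match (illustrative sketch)."""

    def __init__(self, routes: dict[str, str], default: str):
        # Try longer prefixes first, so the first hit is the longest match.
        self._routes = sorted(routes.items(), key=lambda item: len(item[0]), reverse=True)
        self._default = default

    def pool_for(self, key: str) -> str:
        for prefix, pool in self._routes:
            if key.startswith(prefix):
                return pool
        return self._default  # no prefix matched


# Hypothetical key prefixes and pool names.
router = PrefixRouter(
    {
        "views/": "page_cache_pool",         # Rails page cache (workload 1)
        "ar/": "activerecord_pool",          # ActiveRecord cache (workload 2)
        "ar/hot/": "activerecord_hot_pool",  # a hotter subset of workload 2
    },
    default="general_pool",
)
print(router.pool_for("ar/hot/user:1"))  # activerecord_hot_pool
print(router.pool_for("ar/user:1"))      # activerecord_pool
print(router.pool_for("session:9"))      # general_pool
```

Because `ar/hot/` is longer than `ar/`, it wins for keys that match both, which is what lets a small, heavily used subset of a workload get its own pool.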

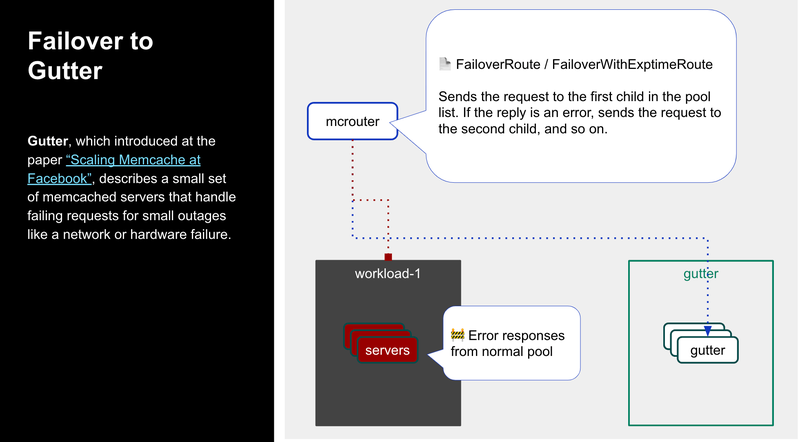

There is also a failover mechanism for temporary problems, such as network or hardware failures, that keep a pool from serving requests. When Mcrouter receives an error from a pool, it temporarily sends the request to a preconfigured fallback destination instead. The mechanism is simple, and the fallback can be a special pool of Gutter servers, as described in the original paper. It cannot protect against platform-wide outages, but it is robust against failures of limited scope, including mistakes during maintenance. Because the Gutter pool absorbs the traffic of the failed servers, the backend database is spared unnecessary load even during an incident.
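The failover behavior can be sketched with in-memory stand-ins for the pools. `FakePool`, `PoolUnavailable`, and `FailoverRouter` are hypothetical names for illustration; the original paper also gives Gutter entries a short expiration time, which this sketch omits.

```python
from typing import Optional


class PoolUnavailable(Exception):
    """Raised when a pool cannot serve a request (network or hardware failure)."""


class FakePool:
    """In-memory stand-in for a Memcached pool that can be marked as failing."""

    def __init__(self, name: str):
        self.name = name
        self.data: dict[str, bytes] = {}
        self.healthy = True

    def _check(self) -> None:
        if not self.healthy:
            raise PoolUnavailable(self.name)

    def get(self, key: str) -> Optional[bytes]:
        self._check()
        return self.data.get(key)

    def set(self, key: str, value: bytes) -> None:
        self._check()
        self.data[key] = value


class FailoverRouter:
    """Send each request to the primary pool; on error, send it to the gutter pool."""

    def __init__(self, primary: FakePool, gutter: FakePool):
        self.primary = primary
        self.gutter = gutter

    def get(self, key: str) -> Optional[bytes]:
        try:
            return self.primary.get(key)
        except PoolUnavailable:
            return self.gutter.get(key)

    def set(self, key: str, value: bytes) -> None:
        try:
            self.primary.set(key, value)
        except PoolUnavailable:
            self.gutter.set(key, value)


main, gutter = FakePool("main"), FakePool("gutter")
router = FailoverRouter(main, gutter)
router.set("user:1", b"alice")
main.healthy = False            # simulate a failed pool
print(router.get("user:1"))     # None: the gutter pool starts empty
router.set("user:1", b"alice")  # e.g. refilled from the database after the miss
print(router.get("user:1"))     # b'alice', now served by the gutter pool
```

After the first miss, repeated reads of the same key hit the gutter pool instead of the database, which is how the gutter protects the backend while the primary pool is down.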

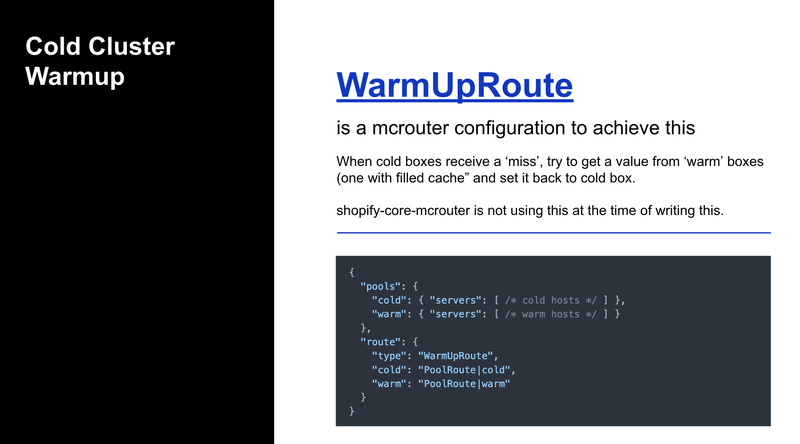

A common problem with caches is the cold cache problem. When an instance first starts, nothing is cached, so every request to it is a cache miss and goes to the backend database for the original data. Depending on the application's workload, business requirements, or, in the worst case, the timing of a deployment or rollout, this can overload the backend and cause an outage. One simple solution is to "warm up" the cold cache before relying on it in production. Mcrouter supports this directly: it can send requests to the cold instance and, on a miss, fetch the value from a warm instance, return it to the client, and fill the cold instance with it.
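The warm-up flow can be sketched with two dictionaries standing in for the cold and warm instances. `WarmUpRouter` is a hypothetical name, and unlike Mcrouter, which fills the cold instance asynchronously, this sketch fills it synchronously for simplicity.

```python
from typing import Optional


class WarmUpRouter:
    """Read from the cold cache, fall back to the warm one, and fill the cold cache."""

    def __init__(self, cold: dict[str, bytes], warm: dict[str, bytes]):
        self.cold = cold
        self.warm = warm

    def get(self, key: str) -> Optional[bytes]:
        value = self.cold.get(key)
        if value is None:
            # Cold miss: ask the warm instance instead of the database.
            value = self.warm.get(key)
            if value is not None:
                self.cold[key] = value  # fill the cold cache for next time
        return value

    def set(self, key: str, value: bytes) -> None:
        # In this sketch, writes go only to the cold instance.
        self.cold[key] = value


warm = {"user:1": b"alice"}
cold: dict[str, bytes] = {}
router = WarmUpRouter(cold, warm)
print(router.get("user:1"))  # b'alice', served by the warm instance
print(cold)                  # {'user:1': b'alice'}
```

Each cold miss becomes a hit on the warm instance rather than a database query, so the cold instance fills up with exactly the keys the live traffic needs.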

Internally, Mcrouter is implemented in C++11. It is multi-threaded, with one thread per core, so it scales well on multi-core machines. It uses fibers to schedule work itself in user space instead of relying on the OS scheduler, and the number of fibers and their stack size are configurable, so you can tune them based on observed production performance.
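The idea behind fibers is cooperative scheduling: many lightweight tasks share one thread, and each task gives up control at points where it would otherwise wait, for example on a network reply. Mcrouter's fibers are C++; the sketch below uses Python generators purely to illustrate that user-space, round-robin switching on a single thread, and `run` and `handle_request` are hypothetical names.

```python
from collections import deque


def run(tasks) -> None:
    """Round-robin scheduler: each task runs until it yields, then goes to the back."""
    ready = deque(tasks)
    while ready:
        task = ready.popleft()
        try:
            next(task)  # resume the task until its next yield
        except StopIteration:
            continue  # task finished; drop it
        ready.append(task)


def handle_request(name: str, steps: int, log: list[str]):
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # e.g. waiting on a Memcached reply: hand the thread to another task


log: list[str] = []
run([handle_request("A", 2, log), handle_request("B", 3, log)])
print(log)  # ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

No OS context switch happens between `A` and `B`; the scheduler decides who runs next, which is the property that lets a single thread per core keep many in-flight requests moving.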